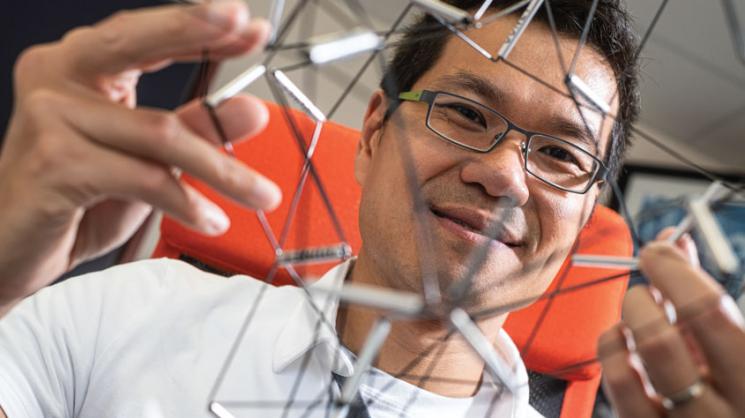

About 10 years ago, Eugene Ng began to notice a pattern emerging in his research.

“Things were just too slow. The amounts of data people were working with were enormous. Their hardware and software weren’t doing the job. Increasingly, I was working with new ways to handle more data, and do it faster and more reliably,” said Ng, professor of computer science (CS) and of electrical and computer engineering.

Since then, Ng and his research team have devised numerous strategies that enable networks to adapt their structures dynamically to accelerate and optimize the transmission of large data sets, along with algorithms and software for managing those technologies efficiently. In 2018, for example, the team introduced ShareBackup, in which backup switches are shared network-wide to repair failures. The strategy lets networks quickly recover full capacity while leaving applications untouched.

The standard method for dealing with a switch failure has been to reroute the flow of data along a different path. Because the networks connecting servers have many paths, data centers can detour traffic around the blockage. But the alternate route can become congested with too much data, severely disrupting its flow.

“Shared backup switches in data centers take on the network traffic in fractions of a second after a software or hardware switch failure. Traffic is redirected by a change in the network structure,” Ng said. ShareBackup has recorded a failure-to-recovery time of 0.73 milliseconds.

Another project is even more ambitious. With the aid of a National Science Foundation grant, Ng created a customized, energy-efficient optical network that streamlines the flow of data to supercomputing clusters. Ng was the principal investigator on the project called BOLD, short for “Big-data and Optical Lightpaths-Driven networked systems.”

“Research produces mountains of data, more than ever before, and sometimes there’s no efficient way to process it. BOLD takes advantage of optical data-networking switches. They have more capacity than the conventional electronic switches used in internet data centers.

“We needed new approaches to network control software, operating systems and applications so that they can keep up with the faster network. Optics is appealing to industry because it’s energy-efficient, scalable and nonintrusive to users,” Ng said.

Another NSF grant, this one for $1.2 million, helped Ng develop distributed programming methods for analyzing streaming data. With Ang Chen, assistant professor of CS at Rice, Ng took advantage of programmable elements in the various components that store and deliver data to customers.

“Previously, the processing was done at the server, without any processing or computation along the path. Our goal is to change that. All of the switches, routers and other components between users and data servers can become an active part in managing and analyzing big data,” Ng said.

In June, Ng was in South Korea to attend the Korean-American Kavli Frontiers of Science symposium, sponsored by the U.S. National Academy of Sciences and the Korean Academy of Science and Technology.

“I talked to neuroscientists and other people who were complaining that their software was working too slowly. I sympathized with them. That’s exactly the problem we’re trying to solve,” he said.